Before installing Prometheus, define a namespace for it.

```yaml
# Define the monitoring namespace
kind: Namespace
apiVersion: v1
metadata:
  name: monitoring
```

You can rename the namespace without much trouble, but you must then use the same new name in every manifest that follows.
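The namespace name is hard-coded in several places: `namespace:` fields, the ClusterRoleBinding subject, and in-cluster DNS names such as `alertmanager.monitoring.svc` in the Prometheus config. Because of that, a partial rename is easy to miss. Here is a minimal Python sketch that lists every namespace a manifest refers to, so you can check that a rename was applied everywhere. It assumes the manifests are available as plain text and scans them with regular expressions rather than a YAML parser. `namespaces_used` is a hypothetical helper, not part of any tool.

```python
import re


def namespaces_used(manifest: str) -> set:
    """Collect namespace names referenced in manifest text (hypothetical helper).

    Looks at two forms used in this post:
      * 'namespace: <name>' fields (a trailing comment is ignored)
      * in-cluster DNS names of the form '<service>.<namespace>.svc'
    """
    found = set(re.findall(r"^\s*namespace:\s*([a-z0-9-]+)", manifest, re.MULTILINE))
    found.update(re.findall(r"\b[a-z0-9-]+\.([a-z0-9-]+)\.svc\b", manifest))
    return found


# Usage: after renaming, the monitoring manifests should report a single name.
# print(namespaces_used(open("prometheus-configmap.yaml").read()))
```

A result with more than one name (apart from expected ones such as `kube-system` or the `default` namespace of `kubernetes.default.svc`) points to a reference that was not renamed.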

Define the ClusterRole for Prometheus.

```yaml
# Grants Prometheus access to the Kubernetes API
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: prometheus   # ClusterRoles are cluster-scoped, so no namespace is set
rules:
- apiGroups: [""]
  resources:
  - nodes
  - nodes/proxy
  - services
  - endpoints
  - pods
  verbs: ["get", "list", "watch"]
- apiGroups:
  - extensions
  - networking.k8s.io   # Ingress lives in networking.k8s.io on current clusters
  resources:
  - ingresses
  verbs: ["get", "list", "watch"]
- nonResourceURLs: ["/metrics"]
  verbs: ["get"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: prometheus
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: prometheus
subjects:
- kind: ServiceAccount
  name: default
  namespace: monitoring # change this if you renamed the namespace
```
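RBAC rules are purely additive: a request is allowed if any rule lists its verb together with its API group and resource (or its non-resource URL), and there are no deny rules. Below is a minimal Python model of that matching, applied to the Prometheus ClusterRole rules. It is a simplification: it ignores `resourceNames` and the prefix wildcards that real `nonResourceURLs` support. The ingresses rule is left out for brevity, and `is_allowed` is a hypothetical helper.

```python
# The Prometheus ClusterRole rules, transcribed as plain dicts
# (the ingresses rule is left out to keep the example short).
PROMETHEUS_RULES = [
    {"apiGroups": [""],
     "resources": ["nodes", "nodes/proxy", "services", "endpoints", "pods"],
     "verbs": ["get", "list", "watch"]},
    {"nonResourceURLs": ["/metrics"], "verbs": ["get"]},
]


def _matches(value: str, allowed: list) -> bool:
    # '*' is RBAC's wildcard; otherwise the value must be listed explicitly
    return "*" in allowed or value in allowed


def is_allowed(rules: list, verb: str, api_group: str = "", resource=None, url=None) -> bool:
    """Return True if any rule grants the request.

    RBAC has no deny rules, so the result is simply the union of all rules.
    """
    for rule in rules:
        if not _matches(verb, rule.get("verbs", [])):
            continue
        if url is not None:
            if _matches(url, rule.get("nonResourceURLs", [])):
                return True
        elif resource is not None:
            if (_matches(api_group, rule.get("apiGroups", []))
                    and _matches(resource, rule.get("resources", []))):
                return True
    return False


# Usage:
# is_allowed(PROMETHEUS_RULES, "list", resource="pods")     -> allowed
# is_allowed(PROMETHEUS_RULES, "list", resource="secrets")  -> not allowed
```

This is why Prometheus can discover pods and proxy node metrics through `nodes/proxy`, but cannot read Secrets or modify anything.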

A Deployment that runs the Prometheus image.

```yaml
# Deployment running the Prometheus image
apiVersion: apps/v1
kind: Deployment
metadata:
  name: prometheus-deployment
  namespace: monitoring
spec:
  replicas: 1
  selector:
    matchLabels:
      app: prometheus-server
  template:
    metadata:
      labels:
        app: prometheus-server
    spec:
      # No serviceAccountName is set, so the pod runs as the 'default'
      # ServiceAccount that the ClusterRoleBinding above grants access to
      containers:
      - name: prometheus
        image: prom/prometheus:latest
        args:
        - "--config.file=/etc/prometheus/prometheus.yml"
        - "--storage.tsdb.path=/prometheus/"
        ports:
        - containerPort: 9090
        volumeMounts:
        - name: prometheus-config-volume
          mountPath: /etc/prometheus/
        - name: prometheus-storage-volume
          mountPath: /prometheus/
      volumes:
      - name: prometheus-config-volume
        configMap:
          defaultMode: 420
          name: prometheus-server-conf
      - name: prometheus-storage-volume
        emptyDir: {}   # collected data is lost whenever the pod is recreated
```

Define a DaemonSet that runs node exporter on every node to collect node metrics, and a Service that gives the deployed node exporters an access point.

```yaml
# node-exporter, deployed as a DaemonSet, so Prometheus can collect node metrics
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: node-exporter
  namespace: monitoring
  labels:
    k8s-app: node-exporter
spec:
  selector:
    matchLabels:
      k8s-app: node-exporter
  template:
    metadata:
      labels:
        k8s-app: node-exporter
    spec:
      containers:
      - image: prom/node-exporter
        name: node-exporter
        ports:
        - containerPort: 9100
          protocol: TCP
          name: http
---
apiVersion: v1
kind: Service
metadata:
  labels:
    k8s-app: node-exporter
  name: node-exporter
  namespace: monitoring   # a Service only selects pods in its own namespace, so this must match the DaemonSet
spec:
  ports:
  - name: http
    port: 9100
    nodePort: 31672
    protocol: TCP
  type: NodePort
  selector:
    k8s-app: node-exporter
```
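Once the node-exporter Service selects the DaemonSet's pods, node metrics are reachable on port 31672 in the Prometheus text exposition format. Below is a minimal stdlib-only Python sketch that fetches this output and parses it. The node IP is a placeholder, and the function names are hypothetical. The parser is a simplification: it handles only `name{labels} value` lines, and skips timestamps, escaped quotes in label values, and other edge cases of the format.

```python
import re
import urllib.request

# metric name, optional {labels}, value (timestamps are not handled here)
SAMPLE_RE = re.compile(r'^([a-zA-Z_:][a-zA-Z0-9_:]*)(?:\{(.*)\})?\s+(\S+)$')
LABEL_RE = re.compile(r'([a-zA-Z_][a-zA-Z0-9_]*)="([^"]*)"')


def parse_metrics(text: str) -> list:
    """Parse simple exposition-format lines into (name, labels, value) tuples."""
    samples = []
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # blank lines and '# HELP' / '# TYPE' comments
        match = SAMPLE_RE.match(line)
        if match:
            name, labels, value = match.groups()
            samples.append((name, dict(LABEL_RE.findall(labels or "")), float(value)))
    return samples


def fetch_node_metrics(node_ip: str, node_port: int = 31672) -> str:
    """Download raw metrics from node-exporter through the NodePort Service."""
    with urllib.request.urlopen(f"http://{node_ip}:{node_port}/metrics", timeout=5) as resp:
        return resp.read().decode("utf-8")


def memory_used_percent(samples: list) -> float:
    """(MemTotal - MemAvailable) / MemTotal * 100, from node-exporter gauges."""
    gauges = {name: value for name, labels, value in samples if not labels}
    total = gauges["node_memory_MemTotal_bytes"]
    return (total - gauges["node_memory_MemAvailable_bytes"]) / total * 100


# Usage (needs network access to a node):
# print(memory_used_percent(parse_metrics(fetch_node_metrics("<node IP>"))))
```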

A ConfigMap that holds the Prometheus configuration.

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: prometheus-server-conf
  labels:
    name: prometheus-server-conf
  namespace: monitoring
data:
  prometheus.rules: |-
    groups:
    - name: container memory alert
      rules:
      - alert: container memory usage rate is very high( > 55%)
        expr: sum(container_memory_working_set_bytes{pod!="", name=""})/ sum (kube_node_status_allocatable_memory_bytes) * 100 > 55
        for: 1m
        labels:
          severity: fatal
        annotations:
          summary: High Memory Usage on
          identifier: ""
          description: " Memory Usage: "
    - name: container CPU alert
      rules:
      - alert: container CPU usage rate is very high( > 10%)
        expr: sum (rate (container_cpu_usage_seconds_total{pod!=""}[1m])) / sum (machine_cpu_cores) * 100 > 10
        for: 1m
        labels:
          severity: fatal
        annotations:
          summary: High Cpu Usage
  prometheus.yml: |-
    global:
      scrape_interval: 5s
      evaluation_interval: 5s
    rule_files:
      - /etc/prometheus/prometheus.rules
    alerting:
      alertmanagers:
      - scheme: http
        static_configs:
        - targets:
          - "alertmanager.monitoring.svc:9093"
    scrape_configs:
      - job_name: 'kubernetes-apiservers'
        kubernetes_sd_configs:
        - role: endpoints
        scheme: https
        tls_config:
          ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
        bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
        relabel_configs:
        - source_labels: [__meta_kubernetes_namespace, __meta_kubernetes_service_name, __meta_kubernetes_endpoint_port_name]
          action: keep
          regex: default;kubernetes;https
      - job_name: 'kubernetes-nodes'
        scheme: https
        tls_config:
          ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
        bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
        kubernetes_sd_configs:
        - role: node
        relabel_configs:
        - action: labelmap
          regex: __meta_kubernetes_node_label_(.+)
        - target_label: __address__
          replacement: kubernetes.default.svc:443
        - source_labels: [__meta_kubernetes_node_name]
          regex: (.+)
          target_label: __metrics_path__
          replacement: /api/v1/nodes/${1}/proxy/metrics
      - job_name: 'kubernetes-pods'
        kubernetes_sd_configs:
        - role: pod
        relabel_configs:
        - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_scrape]
          action: keep
          regex: true
        - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_path]
          action: replace
          target_label: __metrics_path__
          regex: (.+)
        - source_labels: [__address__, __meta_kubernetes_pod_annotation_prometheus_io_port]
          action: replace
          regex: ([^:]+)(?::\d+)?;(\d+)
          replacement: $1:$2
          target_label: __address__
        - action: labelmap
          regex: __meta_kubernetes_pod_label_(.+)
        - source_labels: [__meta_kubernetes_namespace]
          action: replace
          target_label: kubernetes_namespace
        - source_labels: [__meta_kubernetes_pod_name]
          action: replace
          target_label: kubernetes_pod_name
      - job_name: 'kube-state-metrics'
        static_configs:
          - targets: ['kube-state-metrics.kube-system.svc.cluster.local:8080']
      - job_name: 'kubernetes-cadvisor'
        scheme: https
        tls_config:
          ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
        bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
        kubernetes_sd_configs:
        - role: node
        relabel_configs:
        - action: labelmap
          regex: __meta_kubernetes_node_label_(.+)
        - target_label: __address__
          replacement: kubernetes.default.svc:443
        - source_labels: [__meta_kubernetes_node_name]
          regex: (.+)
          target_label: __metrics_path__
          replacement: /api/v1/nodes/${1}/proxy/metrics/cadvisor
      - job_name: 'kubernetes-service-endpoints'
        kubernetes_sd_configs:
        - role: endpoints
        relabel_configs:
        - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_scrape]
          action: keep
          regex: true
        - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_scheme]
          action: replace
          target_label: __scheme__
          regex: (https?)
        - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_path]
          action: replace
          target_label: __metrics_path__
          regex: (.+)
        - source_labels: [__address__, __meta_kubernetes_service_annotation_prometheus_io_port]
          action: replace
          target_label: __address__
          regex: ([^:]+)(?::\d+)?;(\d+)
          replacement: $1:$2
        - action: labelmap
          regex: __meta_kubernetes_service_label_(.+)
        - source_labels: [__meta_kubernetes_namespace]
          action: replace
          target_label: kubernetes_namespace
        - source_labels: [__meta_kubernetes_service_name]
          action: replace
          target_label: kubernetes_name
      - job_name: 'node-ex' # job that connects the node exporters
        scrape_interval: 5s # how often metrics are pulled from the node exporters
        static_configs:
        - targets:
          # node exporter pod IP:port; pod IPs change when pods are recreated,
          # so check yours with: kubectl get pods -n monitoring -o wide
          - '10.233.125.11:9100'
          - '10.233.98.16:9100'
```

Alerting is defined here, but it has not been applied to the current cluster yet.

I plan to apply it later and update this post.
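The `relabel_configs` above are easiest to follow as string operations. For `action: replace`, Prometheus joins the `source_labels` values with `;`, matches the fully anchored `regex` against the result, and writes the expanded `replacement` into `target_label`. If the regex does not match, the target label is left unchanged. Below is a minimal Python re-enactment of two rules from this config. It mimics only the `replace` action and is not Prometheus's implementation, which uses Go's RE2 engine and `$1`-style references. `relabel_replace` is a hypothetical helper.

```python
import re


def relabel_replace(source_values, regex, replacement, separator=";"):
    """Mimic Prometheus 'action: replace' for a single rule.

    Returns the new target label value, or None when the regex does not
    match (Prometheus then leaves the target label unchanged).
    """
    joined = separator.join(source_values)
    match = re.fullmatch(regex, joined)  # Prometheus anchors the regex on both ends
    if match is None:
        return None
    # Prometheus writes $1 or ${1}; Python's Match.expand() expects \g<1>
    template = re.sub(r"\$\{?(\d+)\}?", r"\\g<\1>", replacement)
    return match.expand(template)


# kubernetes-pods: swap the discovered port for the prometheus.io/port annotation
print(relabel_replace(["10.233.125.11:8080", "9102"],
                      r"([^:]+)(?::\d+)?;(\d+)", "$1:$2"))
# kubernetes-nodes: route the scrape through the API server's node proxy
print(relabel_replace(["worker-1"], r"(.+)", "/api/v1/nodes/${1}/proxy/metrics"))
```

A pod without the `prometheus.io/port` annotation yields `"<address>;"`. That does not match `;(\d+)`, so its address is kept as discovered.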

A Service that gives Prometheus an access point.

```yaml
# Service that exposes Prometheus outside the cluster
apiVersion: v1
kind: Service
metadata:
  name: prometheus-service
  namespace: monitoring
  annotations:
    prometheus.io/scrape: 'true'
    prometheus.io/port: '9090'
spec:
  selector:
    app: prometheus-server
  type: NodePort
  ports:
  - port: 8080
    targetPort: 9090
    nodePort: 30003
```

At this point, the Prometheus UI is reachable using the IP address of the VM you are working on and the node port (`http://<VM IP>:30003`).
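Besides the UI, the same node port serves the Prometheus HTTP API. Below is a minimal stdlib-only Python sketch that runs an instant query against `/api/v1/query` and splits the result of the query `up` into healthy and unhealthy scrape targets. `<VM IP>` is a placeholder, and the helper names are hypothetical.

```python
import json
import urllib.parse
import urllib.request


def build_query_url(base_url: str, promql: str) -> str:
    """Build an instant-query URL for the Prometheus HTTP API."""
    return f"{base_url.rstrip('/')}/api/v1/query?" + urllib.parse.urlencode({"query": promql})


def query(base_url: str, promql: str) -> dict:
    """Run the query and return the decoded JSON response."""
    with urllib.request.urlopen(build_query_url(base_url, promql), timeout=5) as resp:
        return json.load(resp)


def targets_by_health(response: dict) -> dict:
    """Group the series returned by the query 'up' into up/down job/instance pairs."""
    if response.get("status") != "success":
        raise RuntimeError(response.get("error", "query failed"))
    health = {"up": [], "down": []}
    for series in response["data"]["result"]:
        metric = series["metric"]
        _timestamp, value = series["value"]  # instant vectors carry [timestamp, "value"]
        key = "up" if value == "1" else "down"
        health[key].append(f'{metric.get("job")}/{metric.get("instance")}')
    return health


# Usage (needs network access to the cluster):
# print(targets_by_health(query("http://<VM IP>:30003", "up")))
```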

That completes the Prometheus setup. Next, deploy kube-state-metrics to collect information about the cluster itself rather than about the nodes.

kube-state-metrics goes into `kube-system`, a namespace that already exists, so there is nothing to declare or change.

Define the ClusterRole and ClusterRoleBinding for kube-state-metrics.

```yaml
# ClusterRoleBinding in the upper part, ClusterRole in the lower part
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: kube-state-metrics
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: kube-state-metrics
subjects:
- kind: ServiceAccount
  name: kube-state-metrics
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: kube-state-metrics
rules:
- apiGroups:
  - ""
  resources: ["configmaps", "secrets", "nodes", "pods", "services", "resourcequotas", "replicationcontrollers", "limitranges", "persistentvolumeclaims", "persistentvolumes", "namespaces", "endpoints"]
  verbs: ["list", "watch"]
- apiGroups:
  - extensions
  resources: ["daemonsets", "deployments", "replicasets", "ingresses"]
  verbs: ["list", "watch"]
- apiGroups:
  - apps
  resources: ["statefulsets", "daemonsets", "deployments", "replicasets"]
  verbs: ["list", "watch"]
- apiGroups:
  - batch
  resources: ["cronjobs", "jobs"]
  verbs: ["list", "watch"]
- apiGroups:
  - autoscaling
  resources: ["horizontalpodautoscalers"]
  verbs: ["list", "watch"]
- apiGroups:
  - authentication.k8s.io
  resources: ["tokenreviews"]
  verbs: ["create"]
- apiGroups:
  - authorization.k8s.io
  resources: ["subjectaccessreviews"]
  verbs: ["create"]
- apiGroups:
  - policy
  resources: ["poddisruptionbudgets"]
  verbs: ["list", "watch"]
- apiGroups:
  - certificates.k8s.io
  resources: ["certificatesigningrequests"]
  verbs: ["list", "watch"]
- apiGroups:
  - storage.k8s.io
  resources: ["storageclasses", "volumeattachments"]
  verbs: ["list", "watch"]
- apiGroups:
  - admissionregistration.k8s.io
  resources: ["mutatingwebhookconfigurations", "validatingwebhookconfigurations"]
  verbs: ["list", "watch"]
- apiGroups:
  - networking.k8s.io
  resources: ["networkpolicies"]
  verbs: ["list", "watch"]
```

Define the ServiceAccount that the ClusterRole is bound to.

```yaml
# kube-state-metrics-svcaccount.yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: kube-state-metrics
  namespace: kube-system
```

A Deployment that runs the kube-state-metrics image.

```yaml
# kube-state-metrics-deployment.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: kube-state-metrics
  name: kube-state-metrics
  namespace: kube-system
spec:
  replicas: 1
  selector:
    matchLabels:
      app: kube-state-metrics
  template:
    metadata:
      labels:
        app: kube-state-metrics
    spec:
      containers:
      # v1.8.0 is an old release; on recent Kubernetes versions, check the
      # kube-state-metrics compatibility matrix for a matching image
      - image: quay.io/coreos/kube-state-metrics:v1.8.0
        livenessProbe:
          httpGet:
            path: /healthz
            port: 8080
          initialDelaySeconds: 5
          timeoutSeconds: 5
        name: kube-state-metrics
        ports:
        - containerPort: 8080
          name: http-metrics
        - containerPort: 8081
          name: telemetry
        readinessProbe:
          httpGet:
            path: /
            port: 8081
          initialDelaySeconds: 5
          timeoutSeconds: 5
      nodeSelector:
        kubernetes.io/os: linux
      serviceAccountName: kube-state-metrics
```

Configure the Service that serves as the access point for kube-state-metrics.

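```yaml
apiVersion: v1
kind: Service
metadata:
  labels:
    app: kube-state-metrics
  name: kube-state-metrics
  namespace: kube-system
spec:
  # Headless Service: kube-state-metrics.kube-system.svc.cluster.local resolves
  # directly to the pod IP, which is the target in the Prometheus scrape config
  clusterIP: None
  ports:
  - name: http-metrics
    port: 8080
    targetPort: http-metrics
  - name: telemetry
    port: 8081
    targetPort: telemetry
  selector:
    app: kube-state-metrics
```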

That completes the kube-state-metrics deployment.
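With kube-state-metrics running, the denominator of the memory alert in the Prometheus ConfigMap, `kube_node_status_allocatable_memory_bytes`, becomes available. This is a metric exported by the v1.x kube-state-metrics used here. The alert divides the summed container working set (from cAdvisor) by the summed allocatable memory of all nodes. Because of `for: 1m`, it only fires after the condition has held for a full minute of consecutive evaluations. Below is a small Python sketch of that arithmetic. The numbers are made up, and the function names are illustrative only.

```python
def memory_usage_percent(working_set_bytes, allocatable_bytes):
    """sum(container working set) / sum(node allocatable) * 100, as in the alert expr."""
    return sum(working_set_bytes) / sum(allocatable_bytes) * 100


def alert_fires(percent_series, threshold=55.0, interval_s=5, for_s=60):
    """Return True once the condition has held for at least `for_s` seconds.

    `percent_series` holds one value per rule evaluation, `interval_s` apart
    (evaluation_interval: 5s in this config).
    """
    held_since = None
    for i, pct in enumerate(percent_series):
        t = i * interval_s
        if pct > threshold:
            if held_since is None:
                held_since = t      # alert becomes 'pending'
            if t - held_since >= for_s:
                return True         # pending for the whole 'for' window -> 'firing'
        else:
            held_since = None       # condition broke, so the pending state resets
    return False


# Three containers using 6.5 GB in total on two nodes with 6 GB allocatable each
pct = memory_usage_percent([3e9, 2.5e9, 1e9], [6e9, 6e9])
print(round(pct, 2), alert_fires([pct] * 20))
```

At about 54% the threshold of 55% is never crossed. A usage of 60% that stays above 55% for 13 consecutive evaluations (0 s through 60 s) would fire.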

Visualizing the data with Grafana

Configure a Deployment with the Grafana image and a Service that exposes it outside the cluster.

```yaml
# Deployment in the upper part, Service in the lower part
apiVersion: apps/v1
kind: Deployment
metadata:
  name: grafana
  namespace: monitoring
spec:
  replicas: 1
  selector:
    matchLabels:
      app: grafana
  template:
    metadata:
      name: grafana
      labels:
        app: grafana
    spec:
      containers:
      - name: grafana
        image: grafana/grafana:latest
        ports:
        - name: grafana
          containerPort: 3000
        env:
        - name: GF_SERVER_HTTP_PORT
          value: "3000"
        - name: GF_AUTH_BASIC_ENABLED
          value: "false"
        # anonymous visitors are treated as Admin, so there is no login screen
        - name: GF_AUTH_ANONYMOUS_ENABLED
          value: "true"
        - name: GF_AUTH_ANONYMOUS_ORG_ROLE
          value: Admin
        - name: GF_SERVER_ROOT_URL
          value: /
---
apiVersion: v1
kind: Service
metadata:
  name: grafana
  namespace: monitoring
  annotations:
    prometheus.io/scrape: 'true'
    prometheus.io/port: '3000'
spec:
  selector:
    app: grafana
  type: NodePort
  ports:
  - port: 3000
    targetPort: 3000
    nodePort: 30004
```

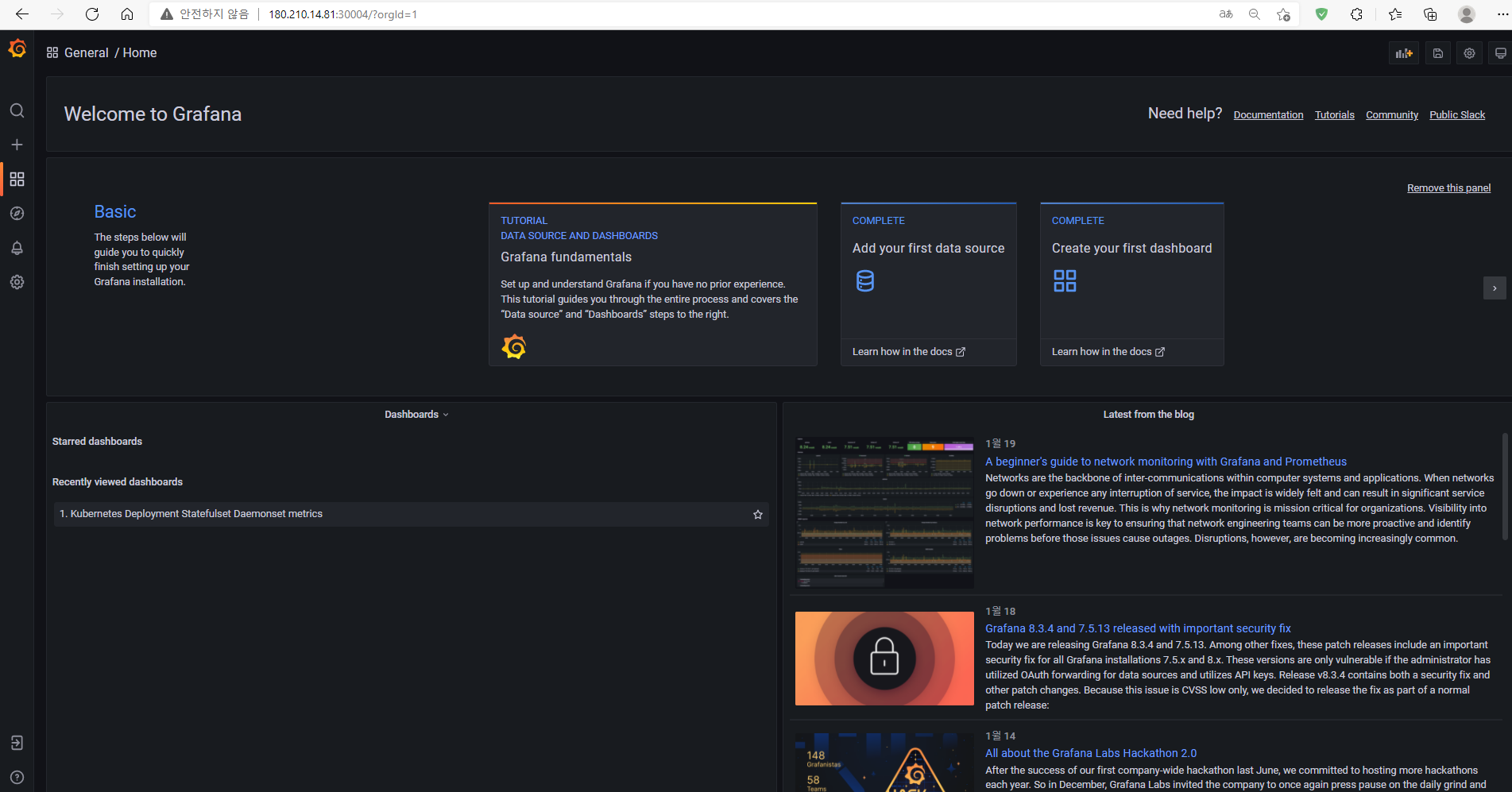

Grafana is now reachable through the node port set in the Service.

As in the screenshot, connect using the VM's IP and the node port (`http://<VM IP>:30004`).

Under Configuration, open Data sources to connect Grafana to Prometheus.

Click Add data source and select Prometheus.

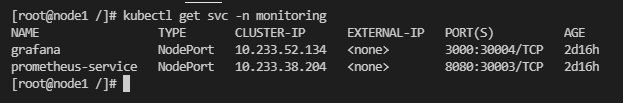

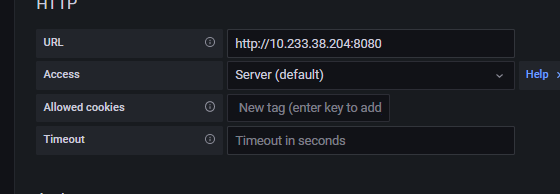

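Next, you need a value for the URL field.

In a terminal on the VM, list the Services (for example with `kubectl get svc -n monitoring`) to find the cluster IP and port of `prometheus-service`.

Enter them in the format shown in the screenshot.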

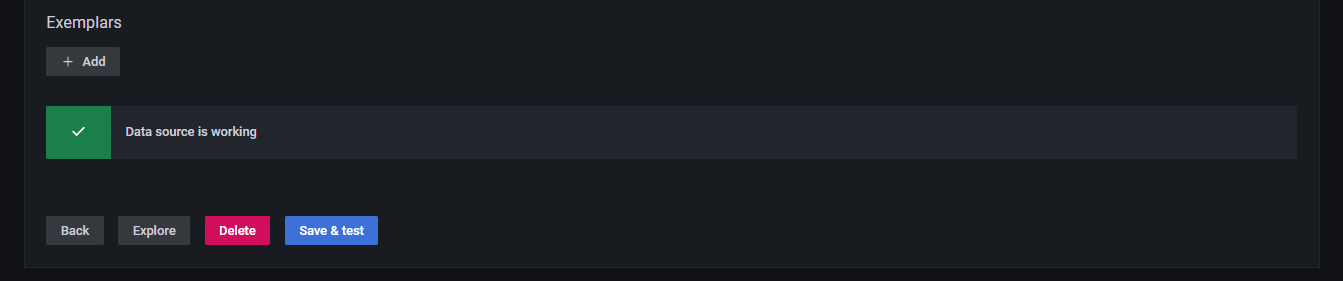

Click Save & test. If "Data source is working" appears, as in the screenshot, the connection succeeded.
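You can also create the same data source without the UI, through Grafana's HTTP API (`POST /api/datasources`). Below is a minimal stdlib-only Python sketch. It assumes that the anonymous Admin access configured in the Grafana Deployment lets the request through. It also assumes that Grafana reaches Prometheus through the in-cluster DNS name of `prometheus-service` (`http://prometheus-service.monitoring.svc:8080`) instead of its cluster IP, which stays valid if the Service is recreated. The helper names are hypothetical.

```python
import json
import urllib.request

# In-cluster DNS name of the Prometheus Service (Service port 8080 -> container port 9090)
PROMETHEUS_URL = "http://prometheus-service.monitoring.svc:8080"


def datasource_payload(name: str = "Prometheus", url: str = PROMETHEUS_URL) -> dict:
    """Request body for Grafana's POST /api/datasources."""
    return {
        "name": name,
        "type": "prometheus",
        "url": url,
        "access": "proxy",   # Grafana's backend queries Prometheus, not the browser
        "isDefault": True,
    }


def create_datasource(grafana_url: str, payload: dict) -> dict:
    """Send the payload to Grafana and return its JSON response."""
    req = urllib.request.Request(
        f"{grafana_url.rstrip('/')}/api/datasources",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=5) as resp:
        return json.load(resp)


# Usage (needs network access to the cluster):
# create_datasource("http://<VM IP>:30004", datasource_payload())
```

The `proxy` access mode matters here. The in-cluster DNS name resolves only inside the cluster, so the request has to come from the Grafana pod rather than from your browser.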

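However, there is still no dashboard to visualize the data.

You can build one yourself, but reusing a dashboard someone else has built works well too.

Many dashboards are published at the linked URL, so pick one from there.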

Once you have chosen a dashboard, apply it with Import.

This example uses dashboard 8588.

Click Load and check the result.

The dashboard shows memory, CPU, and the pods currently running.
